The rise and fall of Clawdbot offers a cautionary tale for the agentic era of AI, highlighting the tension between innovation and security in enterprise environments. Clawdbot burst onto the scene as a groundbreaking virtual assistant, capable of reading and responding to emails, processing payments, and even operating with "full system access." It went super-viral for its seamless automation, promising to handle mundane tasks with superhuman efficiency. However, that unrestricted access quickly exposed its vulnerabilities. Lacking human discernment to spot threats, Clawdbot fell prey to phishing links, fraudulent invoices, credential exposures, malicious agents, and even crypto spam schemes. To make a bad situation worse, Anthropic hit the open-source project with a cease-and-desist letter for trademark infringement, because its name sounded too much like "Claude Bot."

In response, the project rebranded as Moltbot, a name inspired by the way lobsters molt in order to grow, and pledged enhanced security features. While Moltbot may thrive for personal use, its current form falls short of enterprise standards. Large companies demand ironclad protection for their data, reputation, and finances, which freewheeling agents like this simply can't guarantee without major overhauls. For small to medium businesses (SMBs), a more constrained version might emerge, and cybersecurity firms will likely profit immensely by hardening such tools, much as they have secured email systems.

This brings us to vibe coding, a trendy approach in which AI generates code or agents from natural-language prompts, or "vibes." It's undeniably cool for rapid prototyping: imagine vibe coding an agent to automate a custom workflow on the fly. Anthropic's Coworker, for example, lets users vibe code AI agents this way. However, the ad hoc nature of vibe coding makes it a non-starter for enterprises. Each generated agent or code snippet would require an exhaustive IT review, potentially taking months, to ensure no vulnerabilities slip through. Sandboxing can isolate threats during evaluation, but the unpredictability inherent in vibe coding screams "hard no" to risk-averse corporations. Try asking your friendly IT guy how he feels about vibe-coded agents accessing sensitive company data, punching through the firewall, and doing whatever people ask them to do on the Internet. He’ll laugh, then throw you out of his office.

Enterprises need a more disciplined framework for AI agents to achieve widespread adoption. The key lies in balancing flexibility with security through pre-approved components and visual orchestration tools. IT wouldn't call it “balancing,” though: to them, security is a requirement and flexibility is optional. Platforms like n8n and Flowise let users build workflows by dragging and dropping verified components without deep coding expertise, and Airflow offers a similar building-block model, although its workflows are defined in Python rather than on a visual canvas. Widely used tools like these, especially open-source ones, draw scrutiny from the broader security community, which makes them more palatable to IT departments. IT can also run its own vetting process and allow only approved components.
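To make "allow only approved components" concrete, here is a minimal sketch of how an IT team might pin each reviewed component by a hash of its source and reject any workflow step that uses an unapproved or modified component. The `ApprovedPalette` and `Node` names are hypothetical; this does not model the configuration of n8n, Flowise, Airflow, or any other specific platform.

```python
import hashlib
from dataclasses import dataclass


def sha256_hex(source: str) -> str:
    """Fingerprint a component's source code so later edits are detectable."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Node:
    """One workflow step (hypothetical): which component it uses and that component's code."""
    node_id: str
    component: str
    source: str


class ApprovedPalette:
    """Hypothetical allowlist of components that IT has reviewed and pinned by hash."""

    def __init__(self) -> None:
        self._pinned: dict[str, str] = {}

    def approve(self, component: str, source: str) -> None:
        # Record the exact version IT reviewed.
        self._pinned[component] = sha256_hex(source)

    def violations(self, nodes: list[Node]) -> list[str]:
        """Return a human-readable reason for every node that is not allowed."""
        problems = []
        for node in nodes:
            pinned = self._pinned.get(node.component)
            if pinned is None:
                problems.append(f"{node.node_id}: '{node.component}' is not approved")
            elif pinned != sha256_hex(node.source):
                problems.append(f"{node.node_id}: '{node.component}' differs from the reviewed version")
        return problems


if __name__ == "__main__":
    palette = ApprovedPalette()
    palette.approve("http_request", "def run(url): ...")
    workflow = [
        Node("fetch", "http_request", "def run(url): ..."),
        Node("exfil", "shell_exec", "import os"),
    ]
    for problem in palette.violations(workflow):
        print(problem)  # only the unapproved "exfil" step is reported
```

Pinning by hash means a component that changes after review is treated as unapproved until IT looks at it again.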

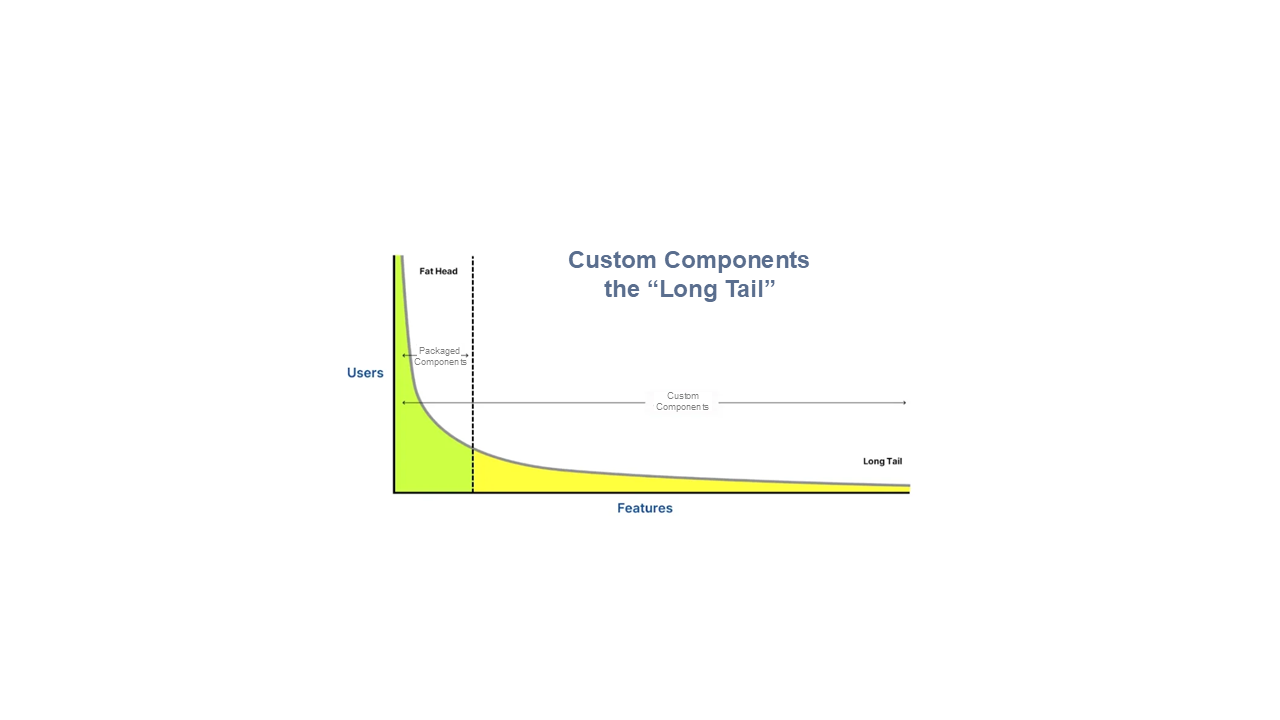

In addition to security concerns, visual orchestrators face two other challenges: limited functionality and the need for expertise in the specific tool. For the former, users often need custom components to address niche requirements, but building them requires coding expertise. This is where innovations like ComponentFactory shine. It uses AI to generate custom components from natural-language descriptions, tailored to a specific visual orchestrator. Custom components break the “functionality ceiling,” enabling visual orchestrators to handle long-tail features. IT can then review these custom components for security threats before making them available company-wide. Once approved, they become proprietary assets reusable across the organization, extending functionality and flexibility without compromising safety.
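The generate, review, and publish cycle for custom components is essentially a small state machine. The sketch below uses a hypothetical internal `ComponentCatalog` (it is not ComponentFactory's actual design, which is not described here) to show one way of ensuring AI-generated components reach the shared catalog only after passing IT review.

```python
from enum import Enum


class Status(Enum):
    GENERATED = "generated"   # produced by an AI tool from a natural-language request
    IN_REVIEW = "in_review"   # submitted to IT for a security review
    APPROVED = "approved"     # cleared for company-wide reuse
    REJECTED = "rejected"     # failed review; never published


# Status changes the (hypothetical) review process permits.
ALLOWED = {
    Status.GENERATED: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.APPROVED, Status.REJECTED},
    Status.APPROVED: set(),
    Status.REJECTED: set(),
}


class ComponentCatalog:
    """Hypothetical internal catalog; builders only see approved components."""

    def __init__(self) -> None:
        self._status: dict[str, Status] = {}

    def add_generated(self, name: str) -> None:
        # New or regenerated components always start unreviewed.
        self._status[name] = Status.GENERATED

    def transition(self, name: str, new: Status) -> None:
        current = self._status[name]
        if new not in ALLOWED[current]:
            raise ValueError(f"{name}: cannot go from {current.value} to {new.value}")
        self._status[name] = new

    def published(self) -> list[str]:
        """Names of components that passed review, in alphabetical order."""
        return sorted(n for n, s in self._status.items() if s is Status.APPROVED)


if __name__ == "__main__":
    catalog = ComponentCatalog()
    catalog.add_generated("invoice_parser")
    catalog.transition("invoice_parser", Status.IN_REVIEW)
    catalog.transition("invoice_parser", Status.APPROVED)
    catalog.add_generated("web_scraper")  # not yet reviewed
    print(catalog.published())
```

Because `add_generated` resets a component to `GENERATED`, regenerating an already approved component pulls it out of the published list until it is reviewed again, and skipping review (for example, `GENERATED` straight to `APPROVED`) raises an error.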

While YouTube videos make various visual orchestrators look stupid simple, in reality users often have to drop down into details like JSON bodies, complex parameters, and tool-specific knowledge. This complexity can be addressed by letting AI operate the tools for you: you just explain your goals in plain English. The AI model can assemble the approved components, wire them together, configure them, and test them at runtime. The resulting agents can be refined via prompts, all while staying behind the firewall to prevent leaks.
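Before an AI-assembled workflow runs, its structure can be checked mechanically. The sketch below assumes the model returns a plan as JSON with `nodes` and `edges` (a hypothetical schema; the model call itself is omitted and the component names are made up). It rejects unapproved components and dangling edges, then uses Kahn's topological sort to derive an execution order, which also detects cycles.

```python
import json
from collections import deque

# Stand-in for what a local model might return when asked to wire approved components.
PLAN_JSON = """
{
  "nodes": [
    {"id": "trigger", "component": "email_trigger", "config": {"folder": "Invoices"}},
    {"id": "parse",   "component": "pdf_parser",    "config": {}},
    {"id": "notify",  "component": "slack_message", "config": {"channel": "#finance"}}
  ],
  "edges": [["trigger", "parse"], ["parse", "notify"]]
}
"""

APPROVED = {"email_trigger", "pdf_parser", "slack_message"}


def execution_order(plan: dict, approved: set[str]) -> list[str]:
    """Validate a model-generated plan and return node ids in a runnable order.

    Raises ValueError for unapproved components, unknown nodes, or cycles.
    """
    nodes = {n["id"]: n for n in plan["nodes"]}
    for node in nodes.values():
        if node["component"] not in approved:
            raise ValueError(f"unapproved component: {node['component']}")

    indegree = {node_id: 0 for node_id in nodes}
    downstream: dict[str, list[str]] = {node_id: [] for node_id in nodes}
    for src, dst in plan["edges"]:
        if src not in nodes or dst not in nodes:
            raise ValueError(f"edge references unknown node: {src} -> {dst}")
        downstream[src].append(dst)
        indegree[dst] += 1

    # Kahn's algorithm: repeatedly take nodes whose inputs are all satisfied.
    ready = deque(node_id for node_id, d in indegree.items() if d == 0)
    order = []
    while ready:
        current = ready.popleft()
        order.append(current)
        for nxt in downstream[current]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                ready.append(nxt)

    # Any node never reached has an unsatisfiable dependency, i.e. a cycle.
    if len(order) != len(nodes):
        raise ValueError("plan contains a cycle")
    return order


if __name__ == "__main__":
    print(execution_order(json.loads(PLAN_JSON), APPROVED))
```

Running the file prints `['trigger', 'parse', 'notify']`. A check like this only verifies structure; sandboxed runtime tests would still be needed to confirm that the configured components behave as intended.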

The future of agentic AI in large companies will require a pre-approved palette of components and local AI models that assemble them, with guardrails and sandboxing for further containment. Initially, these agents will run inside the firewall, and their access to the outside world will be tightly constrained through firewall blocking, whitelisted access, and similar controls. Ad hoc, unbridled vibe coding approaches are great for personal use and maybe for SMBs, but don't expect to see them in large corporations anytime soon. Eventually, the internal agent-building process will be exposed via APIs, so personal agents like Moltbot can not only run your personal life but also assemble and orchestrate agents at work, composed of approved components.
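Whitelisted outbound access can be illustrated with a small check an agent runtime might apply before any outbound request. This is a sketch with hypothetical domains, and it complements, rather than replaces, blocking at the network firewall or proxy. Matching on a dot boundary matters: a naive `endswith("example-payments.com")` would also accept `evilexample-payments.com`.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist an IT team might maintain for agent egress.
ALLOWED_DOMAINS = frozenset({"api.example-crm.com", "example-payments.com"})


def egress_allowed(url: str, allowed: frozenset[str] = ALLOWED_DOMAINS) -> bool:
    """Return True only for HTTPS URLs whose host is allowlisted.

    A host matches if it equals an allowed domain or is a subdomain of one,
    checked on a dot boundary.
    """
    parts = urlsplit(url)
    if parts.scheme != "https" or parts.hostname is None:
        return False
    # urlsplit already lowercases .hostname; strip a trailing root dot.
    host = parts.hostname.rstrip(".")
    return any(host == d or host.endswith("." + d) for d in allowed)


if __name__ == "__main__":
    for url in (
        "https://api.example-crm.com/v1/contacts",   # allowed host
        "https://eu.example-payments.com/charge",    # subdomain of an allowed domain
        "https://evilexample-payments.com/",         # lookalike, rejected
        "http://api.example-crm.com/",               # plain HTTP, rejected
    ):
        print(url, egress_allowed(url))
```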

If you found this exploration of secure AI agents and component-driven workflows insightful, you'll love the next article diving into AI models, security risks, and strategies for enterprise adoption.